How enterprise companies are using agentic AI: The state of the art for 2026

Head of AI Transformation

The map is not the territory. — Alfred Korzybski

Foreword: The year of operational reality

As we stand in early 2026, the speculative fever dream of the "AI takeover" has finally broken. The prediction-market bets of 2023 and 2024 on the extinction of the human contact center agent have given way to what Forrester Research accurately termed the year of "gritty, foundational work." The "magic bullet" phase is over; the operational reality phase has begun.

For the Fortune 500, the strategic question has shifted from "How do we deploy a chatbot?" to "How do we orchestrate a hybrid workforce?" This is not mere semantics; it is a fundamental architectural pivot. The early experiments with "Overlay AI" - independent bot layers bolted onto legacy telephony and CRM systems - have largely hit a ceiling of complexity and latency.

From my vantage point leading AI transformation at Dialpad, the industry consensus is clear: agency requires native intelligence. An AI agent cannot function effectively as an external contractor to the enterprise technology stack. Instead, it must be an integrated employee.

This blog post outlines the state of the art for enterprise agentic AI in 2026. It argues that success is no longer defined by the raw IQ of the Large Language Model (LLM) but by the sophistication of the orchestration layer - the central nervous system that connects the AI brain to the hands of enterprise systems and the voice of the customer.

I. The collapse of the "zero-touch" myth

Over the last 3 years, a pervasive narrative suggested that generative AI would lead to the rapid extinction of the traditional contact center. Prediction markets and tech evangelists forecasted that by 2026, the majority of customer service interactions would be handled by autonomous systems without human oversight.

The reality of 2026 is far more nuanced.

Gartner's strategic prediction still looks sound: by 2028, none of the Fortune 500 companies will have fully eliminated human agents from their service operations. The "Agentless Enterprise" proved to be a mirage, dissolved by the complexity of edge cases and the enduring human preference for empathy in high-stakes situations.

The hybrid design target

We are not in the business of arguing that "AI won't work." We are AI maximalists. We believe that Agentic AI will handle most routine interactions. But everyone who has actually run AI in production for CX - including us and our most advanced customers - has learned the same hard truths:

The ceiling of autonomy: Even the best systems hit a ceiling around the 70–80% autonomous resolution band for well-defined use cases. The remaining 20–30% involves ambiguity, edge cases, missing data or high emotion.

The "no robots" segment: A meaningful chunk of customers - especially in banking, healthcare, and insurance - will never want to talk to an AI. You cannot "optimize them away" without burning trust.

The brand moment: That 20–30% of complex calls, plus the "no AI, please" segment, is where your brand lives. It is where churn happens, where VIP relationships are forged and where legal risk sits.

If your architecture treats these moments as "failures" of the AI system, you will fail at exactly the moments that matter most.

The prevailing operating model in 2026 is collaborative intelligence. We have moved from a "factory model" (scripts and speed) to a "clinic model." Just as a nurse practitioner (AI) handles routine triage while the surgeon (Human Super Agent) handles the operation, 2026 contact centers are staffed by highly skilled problem solvers supported by massive automated infrastructure.

In this world, human intervention is a feature, not a failure. It is a high-value promotion.

II. The physics of conversation: Solving the latency crisis

As Head of AI Transformation, I have observed that the single greatest technical barrier to AI adoption in voice channels is not intelligence - it is latency.

In text-based chat, a 3-second delay is acceptable. In voice, a delay of more than 700 milliseconds breaks the illusion of presence. It creates those awkward "crosstalk" collisions where the user assumes the bot didn't hear them and starts speaking again just as the bot answers.

The "Magic Number" for natural conversation is 300-500ms. Achieving this requires a radical rethinking of telephony architecture.
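As a rough illustration of those thresholds, here is a minimal Python sketch that buckets a measured turn latency. The two numeric limits come from the figures above; the function name and the bucket labels are hypothetical, chosen only for this example.

```python
# Thresholds taken from the text above; names and labels are illustrative.
NATURAL_MAX_MS = 500      # upper end of the 300-500 ms "magic number" band
PRESENCE_LIMIT_MS = 700   # beyond this, the illusion of presence breaks


def classify_turn_latency(latency_ms: float) -> str:
    """Bucket the gap between the caller finishing and the bot replying."""
    if latency_ms <= NATURAL_MAX_MS:
        return "natural"
    if latency_ms <= PRESENCE_LIMIT_MS:
        return "noticeable"
    return "breaks_presence"


# 3600 ms stands in for a multi-second delay on a chained pipeline.
for ms in (400, 650, 3600):
    print(ms, classify_turn_latency(ms))
```

A check like this is most useful when it runs on every turn in production rather than on a lab benchmark, since the collisions described above depend on worst-case rather than average latency.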

The Overlay Penalty

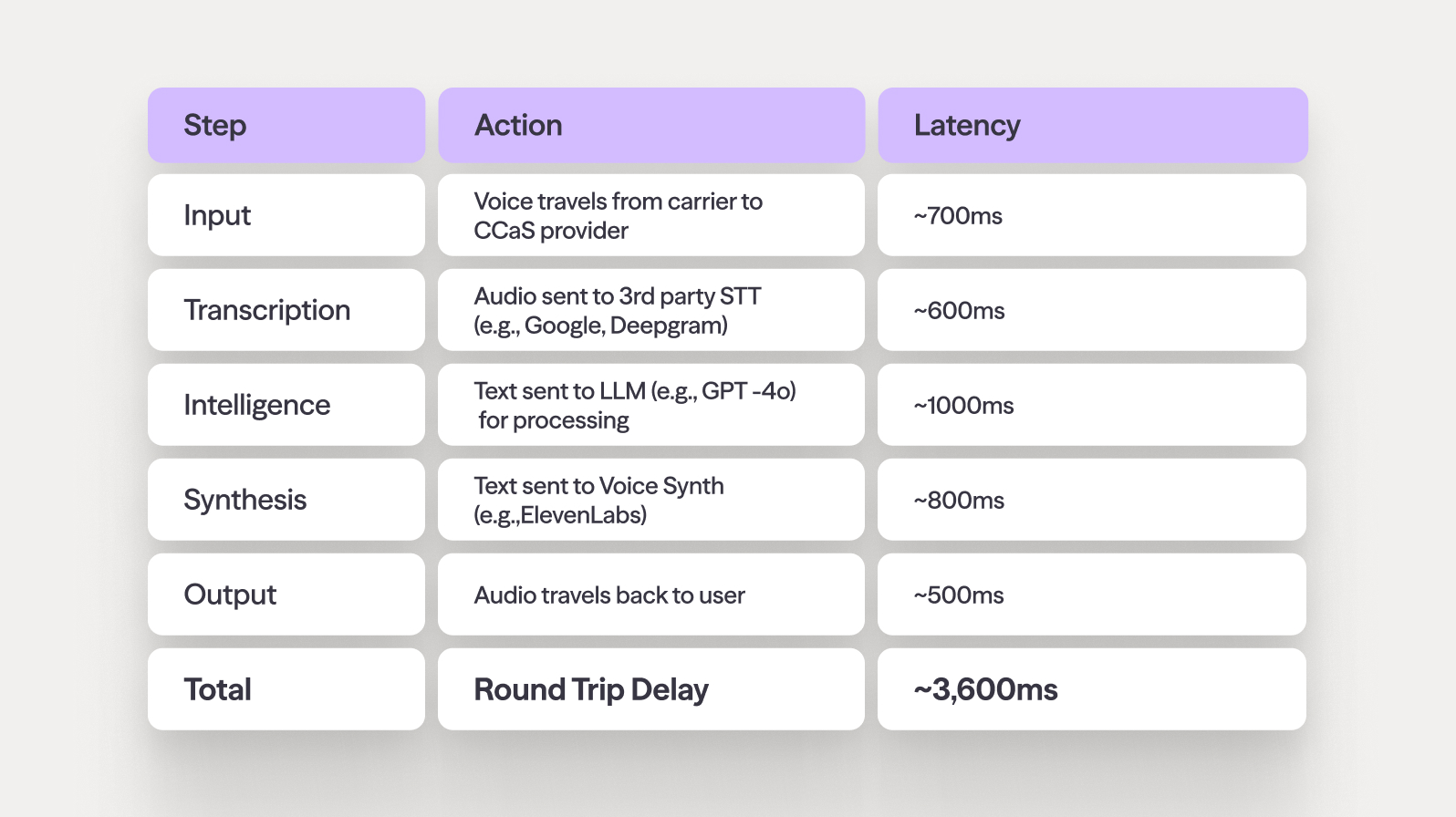

The traditional "Overlay" stack involves a daisy-chain of API calls that inevitably introduces lag:

This 3.6-second delay is the "overlay latency tax." It is why most voice bots feel robotic. They are not listening; they are buffering - and if they aren’t, they are trading accuracy for speed.

The native advantage

In 2026, leaders have moved toward native speech-to-speech models. Instead of the "speech-to-text-to-thinking-to-text-to-speech" pipeline, the model ingests audio tokens directly and outputs audio tokens.

Because the model "hears" the audio directly, it detects tone. It knows the difference between a sarcastic "Great..." and a genuine "Great!" - a nuance lost entirely in text-only pipelines. More importantly, by eliminating the intermediate steps, native platforms can achieve turn-taking speeds of under 1500ms, approaching human-level conversational fluidity.

This is why we argue that you cannot simply "buy" an AI agent and stick it on top of a 20-year-old phone system. The physics don't work. To win in voice AI, you must own the network, the transcription, and the intelligence in a single, unified stack.

III. The fragmentation tax: Why "overlay" is a trap

Beyond latency, the second silent killer of AI ROI is the fragmentation tax.

In the rush to adopt AI in 2024, many companies purchased point solutions: a chatbot for the website, a voice bot for the IVR and a separate CCaaS platform for the humans. They built an architecture of overlay AI - smart skins on top of legacy stacks.

This approach creates a disjointed nightmare:

Context loss: The website bot doesn't know the customer just called the voice bot. When the voice bot transfers to a human, the transcript is lost, forcing the customer to repeat their story. "I already told the machine that..." is the most hated phrase in customer service.

Data silos: You end up with two sets of analytics that never line up. The AI vendor reports "80% Deflection," while the Contact Center VP reports "Rising Complaints."

Vendor finger-pointing: When call quality drops, the AI vendor blames the carrier, the carrier blames the CCaaS and the customer is stuck in the middle.

"AI all the way down"

The Dialpad position - and the direction of the mature market - is native intelligence.

We don't sit on top of the stack. We are the stack. We own the network (telephony), the contact center (CCaaS) and the agentic intelligence.

Because we own infrastructure and intelligence in one system:

Zero-loss handoff: When AI hands off to a human, it’s the same call, the same thread, the same data plane. The human sees the live transcript, recap and key moments before they accept the call.

Unified memory: If the customer told the bot "I'm moving to Chicago," the human agent's screen automatically updates the address field.

Single governance: You set the PII redaction rules once, and they apply to both the AI and the human.
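To make the "set the rules once" idea concrete, here is a minimal Python sketch in which a single rule table is applied to both AI and human turns. It is not a description of Dialpad's implementation: the rule names, the regular expressions and the `redact` helper are all assumptions for illustration, and real PII detection needs far more than two patterns.

```python
import re

# Hypothetical rule set: names and patterns are illustrative only.
REDACTION_RULES = {
    "card_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(text: str) -> str:
    """Apply every rule in the shared table to one transcript turn."""
    for name, pattern in REDACTION_RULES.items():
        text = pattern.sub(f"[{name.upper()}]", text)
    return text


# The same function runs on both sides of the conversation.
ai_turn = redact("Sure, I have your card 4111 1111 1111 1111 on file.")
human_turn = redact("I'll email the receipt to ana@example.com.")
print(ai_turn)
print(human_turn)
```

The design point is that there is exactly one rule table, so a policy change cannot be applied to the bot and forgotten for the human agents.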

Others add AI on top. We are AI all the way down. This distinction is the difference between a science experiment and a scalable enterprise solution.

IV. The death of AHT: New metrics for a new era

For decades, the contact center was managed by Average Handle Time (AHT). The goal was simple: get the customer off the phone as fast as possible.

In an agentic AI world, managing by AHT is disastrous.

Here is the math:

The AI handles all the short, simple calls (password resets: 2 minutes).

The humans are left with only the messy, complex calls (disputes: 20 minutes).

If you look at a traditional dashboard, it looks like human productivity has plummeted. Human AHT skyrockets. But in reality, value density has increased. The humans are doing the work that only humans can do.
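The arithmetic can be sketched in a few lines of Python. The 2-minute and 20-minute durations come from the example above; the mix of 80 simple and 20 complex calls per 100 is an assumption made only so the numbers are concrete.

```python
# Durations from the example above; the 80/20 call mix is an assumption.
SIMPLE_MIN = 2     # password reset
COMPLEX_MIN = 20   # dispute


def human_aht(simple_calls: int, complex_calls: int) -> float:
    """Average handle time in minutes across the calls humans take."""
    total_minutes = simple_calls * SIMPLE_MIN + complex_calls * COMPLEX_MIN
    return total_minutes / (simple_calls + complex_calls)


before = human_aht(simple_calls=80, complex_calls=20)  # humans take every call
after = human_aht(simple_calls=0, complex_calls=20)    # AI absorbs simple calls
print(before, after)  # 5.6 20.0
```

Under these assumptions human AHT rises from 5.6 to 20 minutes even though no individual agent has become slower; only the mix of work has changed.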

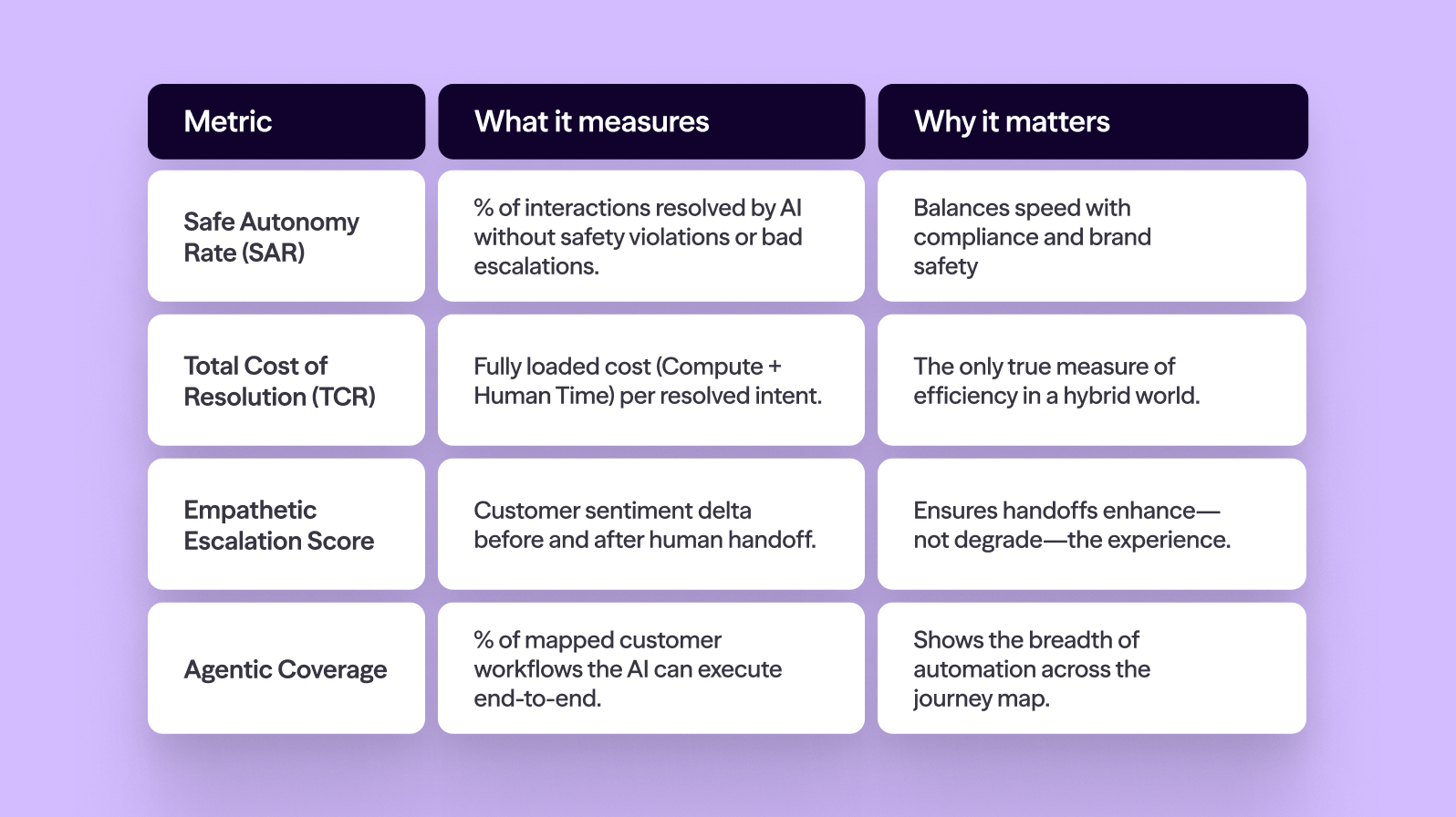

The new scorecard

Enterprises in 2026 are adopting a new set of metrics to evaluate the hybrid workforce:

1. Safe autonomy rate (SAR)

Instead of "Deflection Rate" (which can just mean "we made it hard to reach a human"), SAR measures the percentage of interactions handled by AI without violating safety guardrails or triggering a "bad" escalation. It penalizes the AI for "unsafe" successes where the customer was confused or misled.
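One possible way to compute SAR next to a naive deflection rate is sketched below in Python. The `Interaction` fields and the exact counting rule are assumptions based on the description above, not a standard definition.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    # Hypothetical flags; a real system would derive them from QA or review.
    resolved_by_ai: bool
    guardrail_violation: bool = False
    bad_escalation: bool = False
    customer_misled: bool = False


def deflection_rate(items: list[Interaction]) -> float:
    """Share of interactions that never reached a human."""
    return sum(i.resolved_by_ai for i in items) / len(items)


def safe_autonomy_rate(items: list[Interaction]) -> float:
    """Share of interactions the AI resolved with no disqualifying flag."""
    safe = sum(
        i.resolved_by_ai
        and not (i.guardrail_violation or i.bad_escalation or i.customer_misled)
        for i in items
    )
    return safe / len(items)


sample = [
    Interaction(resolved_by_ai=True),
    Interaction(resolved_by_ai=True),
    Interaction(resolved_by_ai=True),
    Interaction(resolved_by_ai=True, customer_misled=True),  # "unsafe" success
    Interaction(resolved_by_ai=False),                       # handled by a human
]
print(deflection_rate(sample), safe_autonomy_rate(sample))  # 0.8 0.6
```

In this sample the misled customer counts toward deflection but not toward SAR, which is exactly the gap the metric is meant to expose.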

2. Total Cost of Resolution (TCR)

This captures the aggregate cost (Compute Cost + Software License + Human Time) to solve a specific intent.

Example: A "Returns" intent might have a TCR of $0.50 (pure AI). A "Warranty Claim" intent might have a TCR of $15.00 (Human + AI Assist). This granular visibility drives investment decisions.
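The TCR formula can be written as a small Python function. The component split used below is hypothetical and was picked only so the totals match the $0.50 and $15.00 figures in the example; amounts are kept in integer cents to avoid floating-point rounding.

```python
def total_cost_of_resolution(
    compute_cents: int,
    license_cents: int,
    human_minutes: int,
    human_cents_per_minute: int,
) -> int:
    """TCR = compute cost + software license + human time, in cents."""
    return compute_cents + license_cents + human_minutes * human_cents_per_minute


# Hypothetical breakdowns, chosen only to reproduce the totals in the text.
returns_tcr = total_cost_of_resolution(30, 20, 0, 100)    # pure AI
warranty_tcr = total_cost_of_resolution(50, 50, 14, 100)  # human + AI assist

for intent, cents in (("Returns", returns_tcr), ("Warranty Claim", warranty_tcr)):
    print(f"{intent}: ${cents / 100:.2f}")
```

Tracking the components per intent, rather than only the total, shows whether a high TCR is driven by human minutes or by compute.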

3. Empathetic escalation score

Using native AI, every call is transcribed and analyzed for sentiment. We measure the delta during the handoff. Did the customer feel relieved when they reached the human? A successful handoff is one where the customer feels promoted to an expert, not dumped into a queue.
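A minimal Python sketch of the handoff delta follows. It assumes an upstream model has already scored each customer turn on a scale from -1 (negative) to 1 (positive); the window size, the function name and the sample scores are illustrative, not part of any published definition.

```python
from statistics import mean


def escalation_delta(sentiments: list[float], handoff_index: int, window: int = 3) -> float:
    """Mean sentiment just after the handoff minus mean sentiment just before it.

    `handoff_index` is the position of the first customer turn with the human.
    """
    before = sentiments[max(0, handoff_index - window):handoff_index]
    after = sentiments[handoff_index:handoff_index + window]
    if not before or not after:
        raise ValueError("need at least one turn on each side of the handoff")
    return mean(after) - mean(before)


# Illustrative scores: the customer sours with the bot, then recovers.
scores = [-0.25, -0.5, -0.75, 0.0, 0.25, 0.5]
delta = escalation_delta(scores, handoff_index=3)
print(delta)  # 0.75
```

A positive delta suggests the customer felt promoted to an expert; a flat or negative one suggests they felt dumped into a queue.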

V. The human-AI workforce: Rise of the "super agent"

As AI absorbs the routine 80% of volume, the entry-level, script-reading contact center agent is becoming an endangered species. The new human archetype is the "super agent."

These are professionals with high emotional intelligence (EQ), critical thinking, and technical problem-solving skills. They are empowered to negotiate, override policies (within limits) and act as brand ambassadors.

New operational roles

The rise of agentic AI has spawned entirely new job categories within the enterprise support organization:

The conversation designer: Evolved from UX writers, they design the "personality" and turn-taking logic of the AI, focusing on "repair strategies" - what the AI says when it fails.

The AI operations lead: The "Floor Manager" for the digital workforce. They monitor real-time dashboards for "hallucination rates" and make the operational decision to pull a malfunctioning agent offline.

The knowledge manager: In the era of RAG (Retrieval Augmented Generation), the knowledge base is the AI's brain. If the documentation is outdated, the AI will confidently lie. This role is now critical "knowledge ops."

VI. An agentic action plan: From pilot to scale

Building agentic AI is less about launching a moonshot and more about disciplined iteration. Based on successful deployments across our enterprise customer base, here is the playbook for 2026.

Phase 1: The "narrow wedge" (0–30 Days)

Get your CX, Ops, and IT leads into a room and ask one question: Which three customer queries chew through the most agent minutes while following a predictable, rules-based path? Pick one. Whiteboard the workflow. Spin up a read-only agent that can answer questions but cannot touch the database. Instrument everything.

Phase 2: The "write-back" (1–3 Months)

Once your read-only accuracy breaks 90%, unlock write-back capabilities. Give the agent the keys to the API. Let it issue the refund. Let it rebook the flight. Crucial step: Implement a semantic middleware layer. This is a safety valve that validates every API call against strict schemas before it touches your record system, ensuring the AI doesn't hallucinate a transaction.
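As a sketch of what such a validation layer might look like, the Python below checks a model-proposed refund against a strict shape and against known records before anything is written. The payload fields, the `known_orders` lookup and the refund cap are all hypothetical; a production layer would sit in front of the real system of record.

```python
from dataclasses import dataclass

MAX_REFUND_CENTS = 50_000  # hypothetical policy limit


@dataclass(frozen=True)
class RefundRequest:
    order_id: str
    amount_cents: int


def validate_refund(payload: dict, known_orders: dict[str, int]) -> RefundRequest:
    """Reject any proposed call that does not match the schema or the records.

    `known_orders` maps order id to the amount paid, in cents.
    """
    if set(payload) != {"order_id", "amount_cents"}:
        raise ValueError("unexpected or missing fields")
    order_id, amount = payload["order_id"], payload["amount_cents"]
    if not isinstance(order_id, str) or order_id not in known_orders:
        raise ValueError("unknown order")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(amount, bool) or not isinstance(amount, int) or amount <= 0:
        raise ValueError("amount must be a positive integer number of cents")
    if amount > min(MAX_REFUND_CENTS, known_orders[order_id]):
        raise ValueError("amount exceeds what this order allows")
    return RefundRequest(order_id, amount)


orders = {"A-1001": 4_500}
ok = validate_refund({"order_id": "A-1001", "amount_cents": 4_500}, orders)
print(ok)
```

Only a `RefundRequest` that survives validation should ever reach the write API, so a hallucinated order id or an inflated amount fails here rather than in the ledger.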

Phase 3: The "seamless handoff" (3–6 Months)

Extend the agent to the voice channel. This is where the native architecture pays off. Ensure that when the AI detects frustration (via sentiment analysis) or complexity (via intent scoring), it passes the full context to a human super agent. Measure the empathetic escalation score. If customers are repeating themselves, you have failed.
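The escalation rule described above can be sketched in Python as follows. The thresholds, the score ranges and the `HandoffContext` fields are assumptions for illustration; the only point taken from the text is that the handoff is triggered by frustration or complexity and carries the full context with it.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; sentiment in [-1, 1], complexity in [0, 1].
FRUSTRATION_THRESHOLD = -0.4
COMPLEXITY_THRESHOLD = 0.7


@dataclass
class HandoffContext:
    reason: str
    summary: str
    transcript: list[str]


def maybe_hand_off(
    sentiment: float, complexity: float, transcript: list[str], summary: str
) -> Optional[HandoffContext]:
    """Return the context for a human agent, or None if the AI should continue."""
    if sentiment <= FRUSTRATION_THRESHOLD:
        reason = "frustration"
    elif complexity >= COMPLEXITY_THRESHOLD:
        reason = "complexity"
    else:
        return None
    # Copy the transcript so the human sees everything the customer already said.
    return HandoffContext(reason=reason, summary=summary, transcript=list(transcript))


turns = ["I was charged twice.", "I already told you that!"]
ctx = maybe_hand_off(-0.6, 0.3, turns, "Duplicate charge dispute")
print(ctx.reason if ctx else "stay with AI")
```

Because the transcript and summary travel with the handoff, the human agent can open with the problem instead of asking the customer to repeat it.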

Phase 4: Institutional governance (6+ Months)

Form an AI governance board. Move from reactive service to proactive agency. Don't wait for the customer to call. Have the agent reach out: "Hi, I noticed your flight was delayed. Would you like me to book the next connection?"

Conclusion: The orchestrated enterprise

The "AI revolution" is no longer a future event; it is the current operating condition of the modern enterprise. We have moved past the naive optimism of "replacing everyone" and the cynical pessimism of "it's just a hype cycle."

In 2026, the competitive advantage does not belong to the company with the best AI model - models are commodities. It belongs to the company with the best orchestration.

It belongs to the company that has chosen a native architecture to eliminate the friction of latency and fragmentation. It belongs to the company that understands that the AI agent and the human agent are on the same team, sharing the same brain, serving the same customer.

The tools are ready. The workforce is adapting. The only question remaining is whether your architecture can support the speed of your ambition.

Appendix: metric definitions
